Groups that encourage the sexual abuse and exploitation of children and adolescents are still relatively easy to find on Facebook in Portuguese, even after Meta reiterated that combating child pornography is one of its priorities.

Núcleo’s investigation found at least seven public Facebook groups — accessible to any user and with thousands of members — that could be used for the solicitation of minors and that displayed sexually suggestive content referring to children and teenagers.

These groups showed images of minors in a sexualized context, sometimes in their underwear, and housed comments from adults such as “hot, delicious, beautiful,” as well as polls about relationships with minors and requests for private conversations.

After being contacted by Núcleo, Facebook took down the seven groups.

THIS MATTERS BECAUSE...

- Child sexual exploitation and abuse are crimes of the utmost gravity, even when they occur in the virtual sphere.

- Beyond the solicitation of minors, the case raises alarm about the improper use of images of children and teenagers online.

- It shows that even the world's largest social networking company still cannot eliminate such content from its main platform.

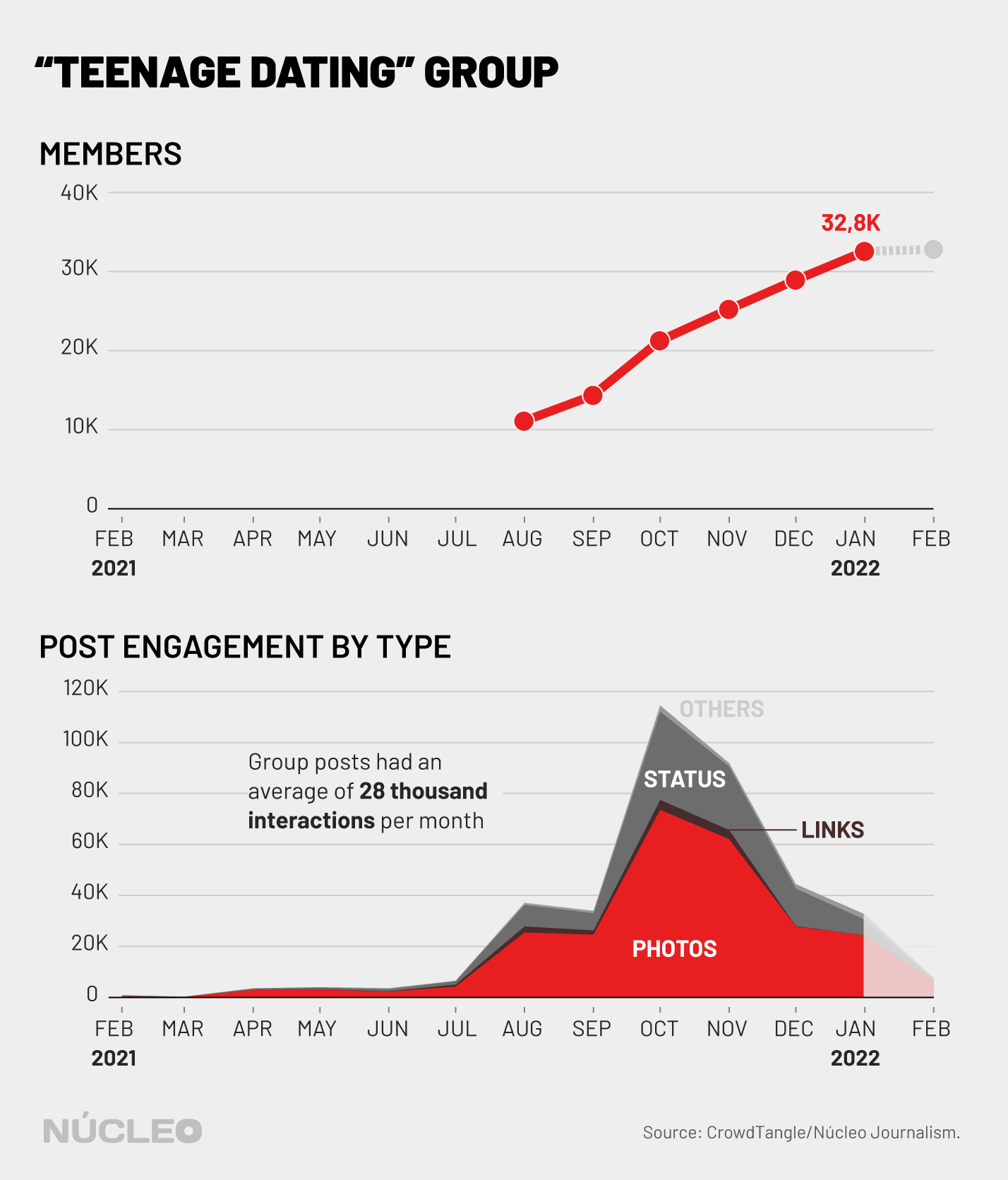

The two largest and most flagrant communities — Só Novinhas (Only Young Chicks) and Namoro Adolescente (Teenage Dating) — totaled almost 40,000 members. In 12 months, they registered 386,000 interactions in 21,000 posts. This report only publicizes the groups’ names because they were taken off the platform.

The existence and persistence of public groups of this scale, in which content of this type circulates, suggest that Facebook's moderation systems are still insufficient to combat child sexual abuse on its main platform, at least in Brazil and in Portuguese, despite this being a stated priority of the company.

It is worth noting that no explicit images of child pornography were found, but the context makes clear that this is sexually suggestive content involving images of minors or references to them.

To provide evidence of the investigation, Núcleo decided to publish an edited version of the images found in the group, always prioritizing the privacy of the children and teenagers and removing any possibility of recognition. This follows the standard adopted by The New York Times in 2019, which was based on a format suggested by the Canadian Center for Child Protection.

Even with all editing efforts, Núcleo has hidden these images in collapsible buttons on the site. Click with discretion.

The evidence was submitted to Facebook in full on Feb. 11, 2022, a day on which all seven groups were still active on the platform.

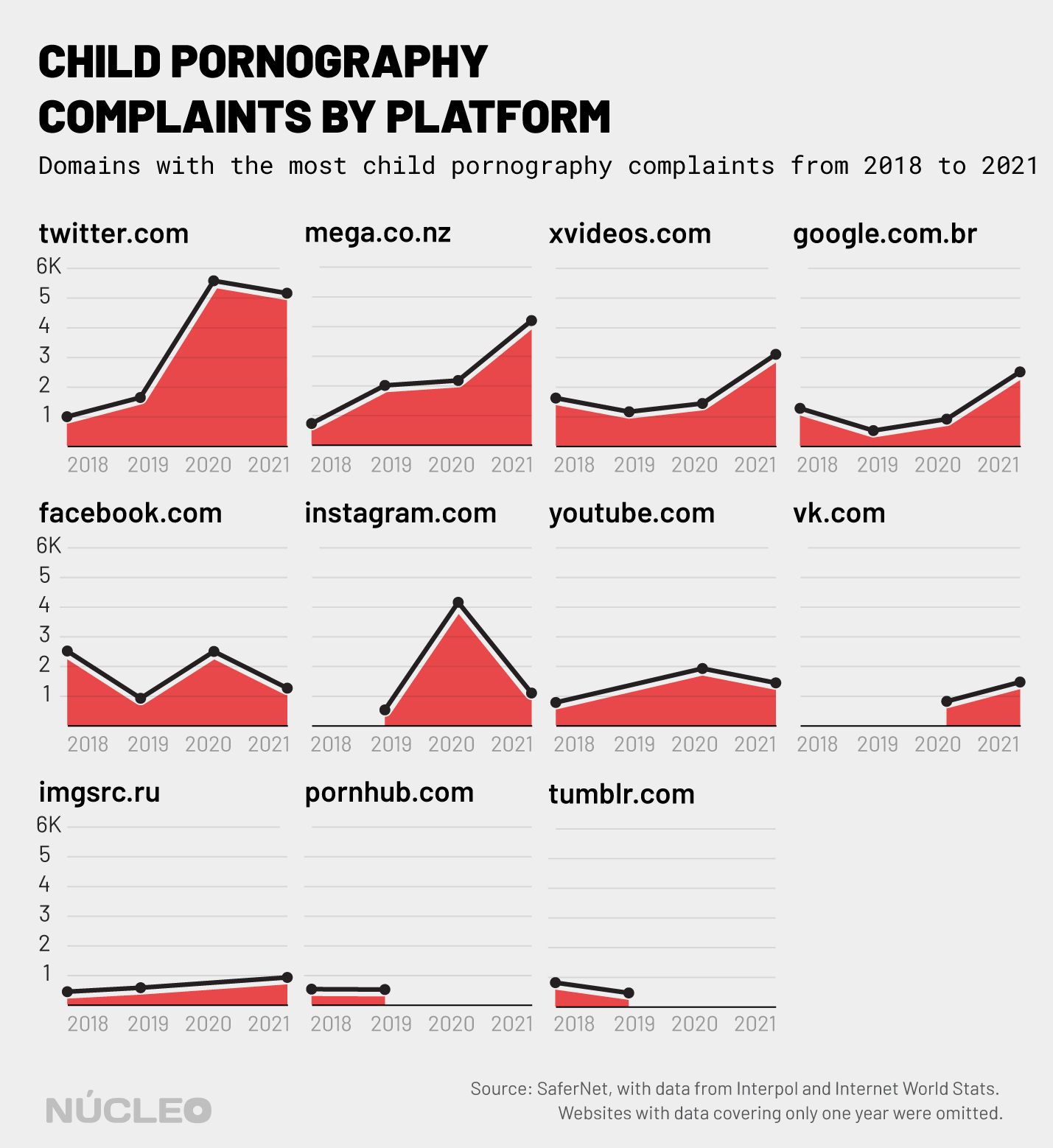

Among the platforms monitored by SaferNet (a non-governmental organization that promotes human rights on the Internet), Facebook is one of those with the most reports of child pornography in recent years.

In a statement sent through its press office, Facebook/Meta said:

"We have removed the groups pointed out by the Núcleo report. We do not allow our platforms to be used to endanger children. Our efforts to combat child exploitation focus on preventing abuse, detecting and reporting content that violates our policies, as well as working with experts and authorities to keep children safe. We have more than 40,000 people working in safety-related areas, and we use a combination of community reporting, technology, and human review to identify and remove content that violates our policies. We constantly cooperate with authorities on investigations, providing information under applicable law."

EASY TO FIND

It was not necessary to dig into the depths of the social network to find groups of this kind. Our reporting was initially investigating an unrelated matter, but a small detour was enough to find content suggestive of the sexual exploitation of minors.

One of the groups viewed by Núcleo, removed by Facebook after the press office was contacted, had used the same cover photo for almost a year: two girls in bathing suits on a beach, one with her face completely visible and the other, because of how the photo was cropped, with only half of her face visible.

The two are unquestionably minors.

"What beautiful little babies they are," wrote one user, among other more alarming comments that will not be replicated.

Some users criticized the choice of photo, saying that these were children and that it was pedophilia. The words pedophilia and pedophile were repeated several times, but apparently this was not enough for Facebook to be alerted and take action. Everything remained online.

It is important to point out that content and groups of this kind cannot necessarily be characterized as pedophilia, as explained to Núcleo by lawyer Ana Cifali, from Alana Institute, which is part of the Coalizão Direitos na Rede (Coalition for Rights on the Net).

"The legislation doesn't use the term pedophilia, which is more closely linked to a mental health issue, to people who feel attracted to children and teenagers, when in fact sexual abuse and exploitation of children also happen for other reasons," she explained.

"Not every person who abuses a child and teenager is a pedophile, but there is the whole issue of power relations between children and adults."

Regarding the content seen by Núcleo, the more appropriate term is sexual abuse, which, according to Cifali, "is every action that uses the child or teenager for sexual purposes, whether for penetration or any other libidinous act, including by electronic means."

The reporting found many "teen dating" groups, some of which seem aimed at specific age groups.

But even in groups such as this, which are, in theory, aimed at a younger audience, some members are adults and it is even possible that there are adults posing as kids in fake profiles, a tactic widely used by pedophiles. Even if there is no explicit or sexually suggestive content, this type of practice — known as soliciting or child grooming — is problematic in itself.

The Child and Adolescent Statute (ECA) addresses this type of contact in Article 241-D, which sets a sentence of one to three years in prison for anyone who "solicits, harasses, instigates, or forces, by any means of communication, a child to commit libidinous acts."

PRIORITY

According to its rules, Facebook does not allow content that "sexually exploits or endangers children."

The company also says that it removes images of naked children even when posted by parents because of the "potential for abuse by others and to help avoid the possibility of other people reusing or misappropriating the images."

Brazilian legislation prohibits, both through the ECA and the Civil Code, the use of children's images without authorization. The ECA speaks of the "inviolability of the physical, psychological, and moral integrity of children and adolescents, including the preservation of their image, identity, autonomy, values, ideas and beliefs, personal spaces and objects," and establishes that it is everyone's duty to care for this integrity.

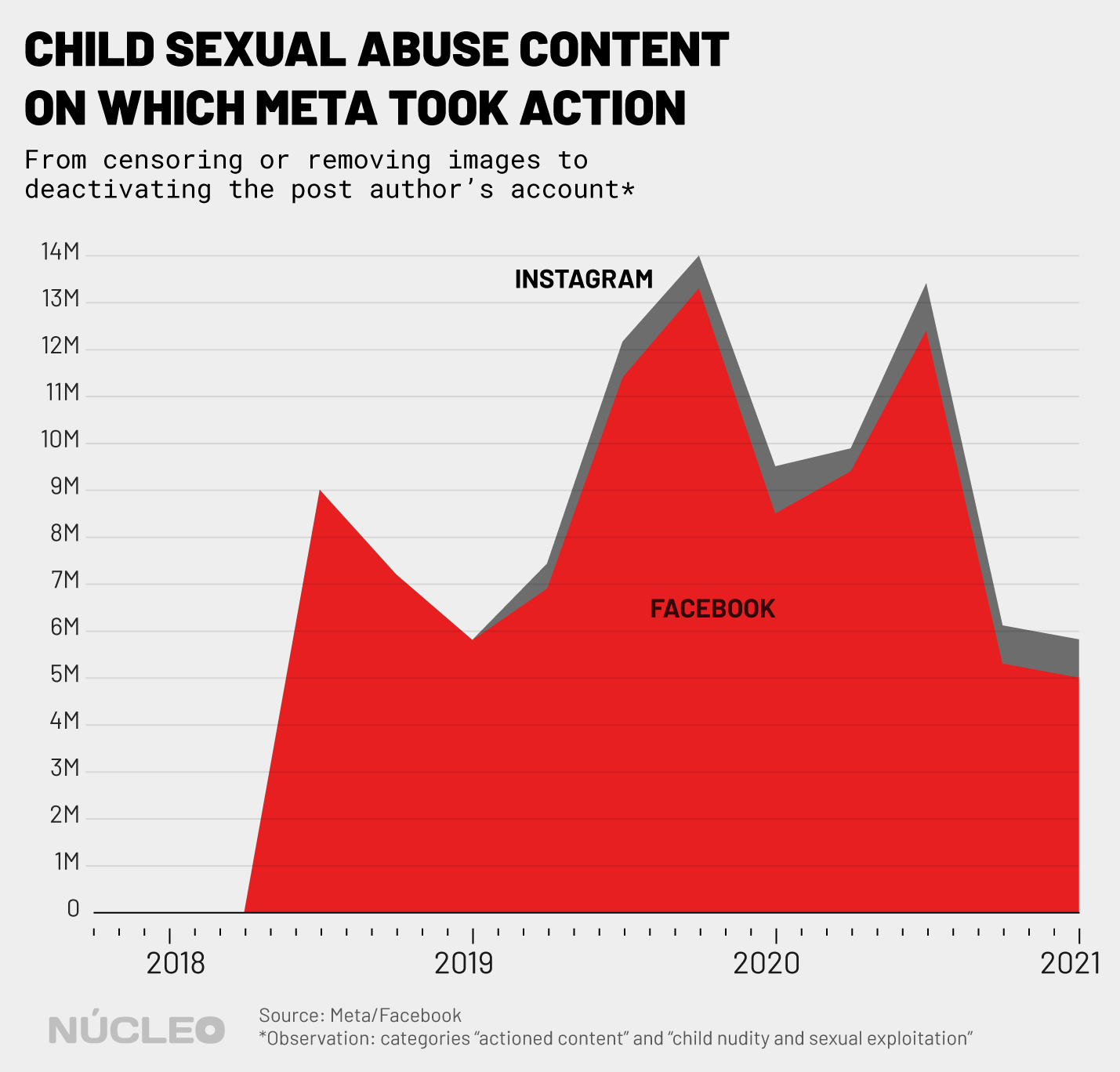

In 2018, Meta introduced new technology to combat such content on Facebook. In addition to the photo-matching system that was already in use, the company started using artificial intelligence and machine learning to "proactively detect child nudity and previously unknown child exploitative content when it’s uploaded."

As part of the protocol against child abuse on the platform, the company also reports identified cases to the National Center for Missing and Exploited Children (NCMEC), in compliance with the law. Based in the United States, this nonprofit entity communicates with the competent authorities in each country.

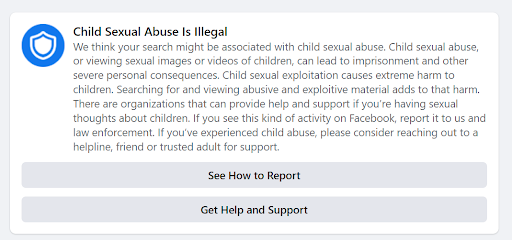

In February 2021, the company introduced new features aimed mainly at dissuading people from engaging with this kind of content.

When a user searches for terms associated with child exploitation, they receive a pop-up that tells them about the consequences of viewing illegal content and suggests ways to get help.

Users who share such content receive a security warning about the potential damage and the legal consequences of sharing such material.

But according to a whistleblower who provided documents to the U.S. Securities and Exchange Commission (SEC) in Oct. 2021, Facebook's response to child sexual abuse is "inadequate."

In testimony to the Commission, to which the BBC had access, the former Facebook employee (who remained anonymous) said that the problem had not been solved because the company had not had "adequate assets dedicated to the problem," citing a small team that was eventually dismantled. The former employee also said that the company did not understand the real scope of the child abuse problem because it "doesn't measure it."

In the statement given to the Commission, the employee also allegedly gave a warning about Facebook groups, which were described as "harm facilitators."

According to João Victor Archegas, a researcher at the Institute of Technology and Society (ITS-Rio), Facebook's repositioning towards the "virtual living room," to the detriment of the idea of a "public square," may have favored the proliferation of harmful content in groups.

With a focus on building communities and groups among people with common interests, Facebook had to make a trade-off, the researcher explained to Núcleo.

"To build a community with a specific interest, you have to give lots of moderation power to the administrator and the moderators of that specific group. Then they are the ones who will set the rules, define what can and cannot be said, what can and cannot be done within that specific group," Archegas said.

If you come across content of this kind:

- Report it: you can report the content, group, or profile using the platform's own reporting options, but it is important to report to an external body as well. SaferNet Brazil has an online reporting system.

- Don't share and don’t engage with the content. Even if the intention is to draw attention to the issue, by engaging you can increase its reach and may end up being held accountable for it.

DOUBLE STANDARDS

One thing that has become clear from the Facebook Papers is that Facebook does not devote the same efforts to countries in the Global South as it does to English-speaking countries, especially the United States.

In its transparency report, Meta says that between July and Sept. 2021 it proactively detected 98.9% of the content it took action on for nudity and child sexual exploitation. The amount of content Facebook took action on dropped from five million in Q1 2021 to 1.8 million in Q3, a decrease the company attributed to improvements in detection technology.

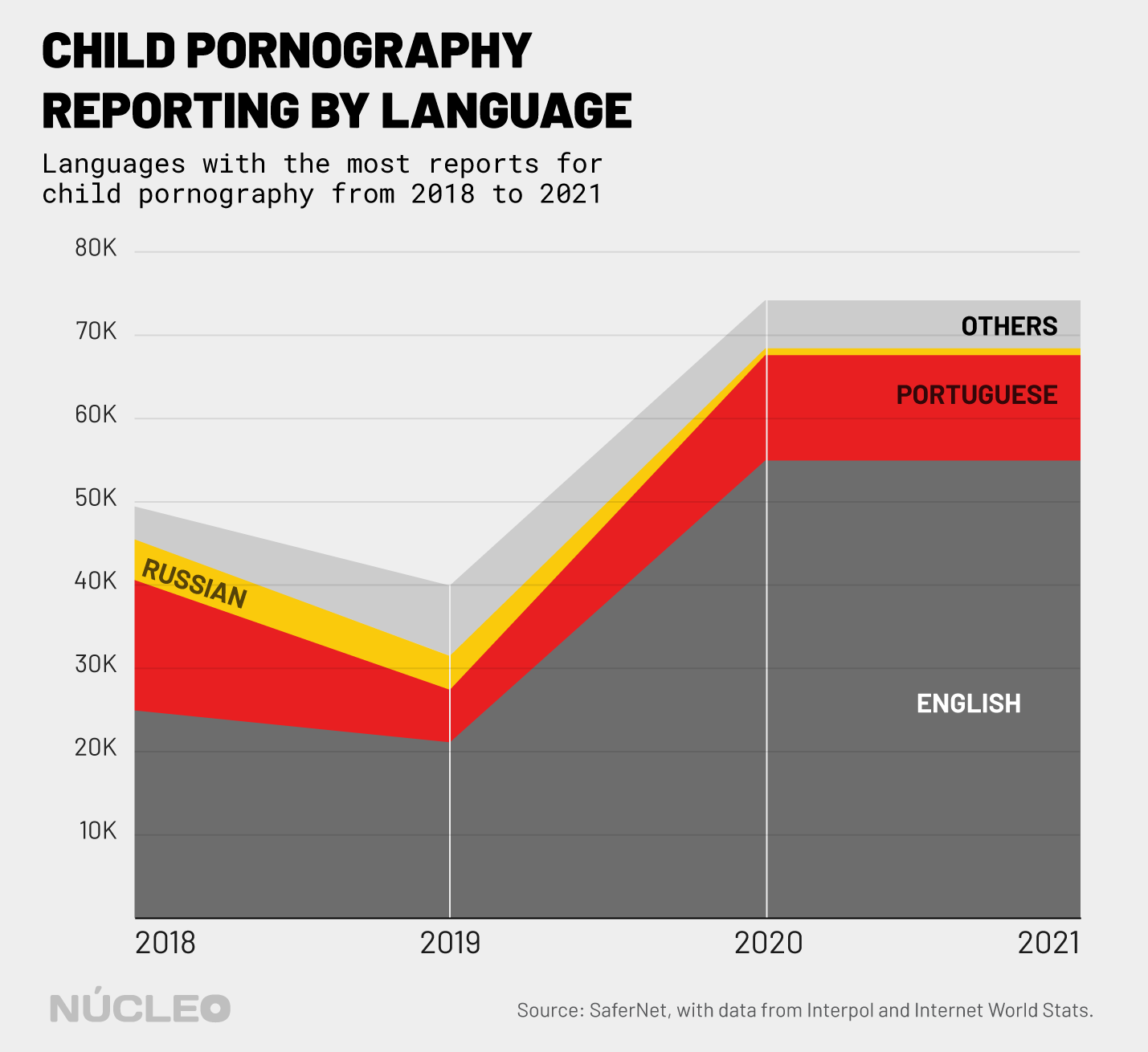

But the data voluntarily disclosed by the company illustrates a global scenario, with no country-by-country breakdown. Since English-language content usually receives the most attention from moderation, it is not possible to know how the platform acts to combat child sexual abuse in other languages.

For Archegas, it is possible that the issues regarding lack of moderation in languages other than English will impact the removal and inspection of child abuse and exploitation content.

This is a worrisome possibility, since the protection of children and teenagers in the Global South tends to be "sidelined," said Maria Mello, coordinator of the Children and Consumption program at Alana Institute, in an interview with Núcleo.

"It is a good idea to call on platforms and understand if they are actually carrying out what they are promising," Mello said.

"There is an issue that we always look at, which is the socioeconomic, regional inequality between countries. Often a practice, a policy that is announced globally takes longer to be implemented in countries of the Global South," explained Mello.

SHARED RESPONSIBILITY

It is everyone's duty to look after the integrity of children and teenagers, as established by the Child and Adolescent Statute. It is everyone's responsibility, not just the families', to monitor and report child sexual abuse and exploitation, including virtual abuse.

"We always stress how this is a responsibility that cannot be exclusive to the families. It needs to be shared by all of society, starting with the platforms, and the State, which will have to find ways to oversee these platforms’ practices," said Mello.

In 2021, SaferNet received 101,800 reports of child pornography through its reporting service. The number represented an increase of 3.65% compared to 2020 and it was the first time since 2011 that the entity received more than 100,000 reports.

According to SaferNet, such child pornography content was hosted on 53,900 web pages, of which 23,500 were removed.

HOW WE DID THIS

We came across these groups while researching another topic, ‘money slave’ fetish groups. A comment on a post in one of these groups suggested the existence of groups focused on minors, so we started searching for them. On our first attempt, using the term novinha (‘young chick’), we found one group, the most serious of all.

Using an alternative profile (with no photo and newly created), we joined the public groups and asked to join private groups — all requests were answered in less than a day. Following the posts and discussions in these groups, we realized that there was a second type of group where criminal practices of this type were taking place: the dating groups.

Originally targeted at teens, and some limited to specific age groups (for example: "girls and boys ages 11 to 15"), these public groups, one with over 30,000 members, are a perfect lure for abusers. These spaces serve well for adults interested in soliciting minors, so much so that some young people comment on approaches of this kind and warn others to be careful about the people they talk to.

We saved screenshots of posts and links from the groups and, while the research was still under way, shared them with Facebook through its press office.

The data on reports of child pornography come from the Indicadores da Central Nacional de Denúncias de Crimes Cibernéticos (Indicators of the National Center for Cybercrime Reports), maintained by the NGO SaferNet.